"What reinforcement we may gain from hope;

If not, what resolution from despair."John Milton, Paradise Lost, 1667, Book 1

"A small number of rules or laws can generate systems of surprising complexity... The rules or laws generate the complexity and the ever changing flux of patterns that follows leads to perpetual novelty and emergence."John Holland, Emergence, 1998, Ch 1

Classifiers, Iterated Function Systems and Lindenmeyer Systems form part of a range of complexity techniques known as Production Systems. Here we shall introduce these ideas and see how they can be used to allow systems to develop over time in a contextual way. A production system is a set of rules that trigger each other to produce action in their environment and as such could be seen as limited models of how cells, organisms or brains work. The general nature of these models allows them to be applied in many different forms.

An iterated system departs from the linear input ![]() process

process ![]() output chain

common to most scientific thinking and loops the output back into the input. Thus

the same process occurs repeatedly (recursively). As the process can modify

the system variables this allows the results to cover a wide range or to map

out a path or orbit in state space. Fractal equations like the Mandelbrot and Julia sets

are systems of this type. In IFS Fractals the system consists generally of a

set of mathematical equations (usually contractive affine transformations)

one of which is chosen (usually probabilistically) for each iteration. This process

generates such famous constructions as the snowflake (illustrated),

Sierpinski triangle and Barnsley fern.

output chain

common to most scientific thinking and loops the output back into the input. Thus

the same process occurs repeatedly (recursively). As the process can modify

the system variables this allows the results to cover a wide range or to map

out a path or orbit in state space. Fractal equations like the Mandelbrot and Julia sets

are systems of this type. In IFS Fractals the system consists generally of a

set of mathematical equations (usually contractive affine transformations)

one of which is chosen (usually probabilistically) for each iteration. This process

generates such famous constructions as the snowflake (illustrated),

Sierpinski triangle and Barnsley fern.

We can say that in general IFSs are the attractors that emerge when assembled from small copies of themselves, using combination of translations, rotations, and scalings. They exhibit strong self-similarity. It is the 'choice' between transformations aspect of IFS that distinguishes them from normal linked equations (although conditionals can also be inserted into fractal equation evaluation - e.g. Fractint 'formula'). IFS systems are used extensively in data compression applications (the IFS rules form a 'seed' that concisely encodes a picture).

To make the system more flexible IF... THEN decisions can be added and in this

form it approaches that of expert systems where only part of the 'rule book' can be

implemented at any one time step. L-Systems thus add context to the IFS

production scheme. Here we have a list of transforms between symbolic components.

For example we may say component A goes to B (IF A THEN B), and B goes to AA.

Applying these rules in turn to an initial seed (say A), we get the growing sequence:

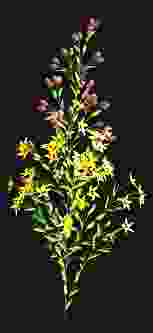

These are formal context-sensitive grammars consisting of Variables, Constants, Rules and Initialisations which specify a set of rewriting rules. More complex versions of these rules are often designed to incorporate geometric elements (Turtle graphics or LOGO), which can allow decisions such as "IF there is an A to the left AND a B to the right MOVE C steps forward ELSE ROTATE D degrees" to generate pictures. These are further improved if random noise (or environmental sensitivity) is added to avoid mechanical regularity. This process can give rise to amazingly realistic creations of natural organic forms as seen in this example of a plant. These forms of directed building block recombination provide methods of growth or development, ways of exploring state space, the space of possible structures.

Both IFS and L-Systems are static sets of rules in essence, but we can look to evolving them by the techniques used in Genetic Algorithms. GAs generally use a two state alphabet of 1 and 0 to specify genotype components, but we can generalise this to include also a 'don't know' character (#), By doing this we can have a ternary (3 state) string that specifies more than one possible genome or rule. For example for a 4 bit string, #### specifies all of state space, 1### all genomes beginning with a 1. Note that a single specified character partitions the state space in half (it will match half the possible genomes), this is the order or specificity of the rule, thus a rule of form #10# has order 2 and 1011 has order 4. Each rule is called a schemata and the set of rules (partitions over state space) the schema.

The most important aspect of schemas is Holland's 'Schema Theorem' which states: "Schemas whose average fitness remains above the population average will receive exponentially increasing numbers of samples over time". This means that the production system will operate in an implicitly parallel way, searching state space, and will converge to an optimum state (but not necessarily the global optimum). This parallelism is a form of weak cooperation, in that bit positions that work well together can be said to cooperate by association, so this methodology incorporates epistatic or synergistic associations between the bits (i.e. it takes into account trade-offs or compromise between the components).

Classifiers add the concept of schemas to the production rules, this enables us to generalise rules over the environment space and arrange to mutate or discover new rules with which to explore this space. These systems are mid way between non-symbolic systems such as Neural Networks and symbol based systems such as Expert Systems and L-Systems. Central to the principle is the idea of a 'broadcast language' where each matched rule issues a general message (its output) to the environment (similar to how a gene 'broadcasts' a protein in a cell).

In these systems the environment is assumed to be sensed and each sensor codes a bit in environment space - rule space is thus mapped one-to-one onto this input space. While just creating different rule combinations allows us to explore rule space, it does not however tell us anything about the efficiency of the rules or the messages being used (they are thus a form of random collision model). In the GA world an additional layer is added to evaluate the fitness of each choice and to determine which genomes to discard and which to modify. For a practical classifier system some procedure of this type (called 'credit assignment or allocation') will also be necessary.

Various methods to evaluate rule schemes have been tried but, unlike GAs which inhabit their own world, production systems relate to an external environment and thus any feedback regarding effectiveness must come from that source. Reinforcement Learning is the field that studies these ideas and indirectly includes both classifier systems and neural networks.

Two general forms of feedback are possible. In the first, the environment will give the 'correct' answer (rather like supervised learning in NNs or teachers), thus changes can be made directly to the system to better approximate the answer. Generally however the environment is not that specific and an answer simply of good or bad (or even of no response at all unless wrong) is more likely.

The general evaluation addition needed to form a simple classifier system (SCS) is the 'bucket brigade'. In this each rule has a strength and if it matches an environmental input (e.g. input 1011, rule 1### matches, rule 0#10 does not) it takes its action. If this results in a correct response from the environment then the rule strength is increased. Generally there are many rules that match any input, so they all post their proposed responses to a 'message board' which also contains the environmental messages. The winning rule is that with the highest strength times specificity (so that a more specific rule takes precedence over vague ones) with stochastic tie breaks. The best rules are mutated in some way to form new rules allowing the system to evolve a good set of rules. An important feature of classifiers is that they are strongly cooperative (they form chains of rules that are mutually fitness affecting and beneficial if successful - the 'bucket brigade')

Two main 'schools' of classifier thought are evident. In the Michigan approach each rule is treated as independent, which allows fast evolution but gives poor problem solving ability ('parasite' rules invade the population - a problem also seen in social individualism !). The Pitt approach treats the set of rules as a whole, having populations of different sets of rules which then compete. The winner here creates a more powerful set of cooperative rules but (like many collectives) is slow to find solutions and wasteful of resources. In these systems 'bid costs' and 'existence costs' can be used to penalise rules that are too general (always matched) or useless (wrong actions), which are eventually are then replaced.

Due to the polarised nature of these two approaches alternatives have been proposed. One, Organized Classifier Systems (OCS) is based on the concept of transaction costs from economic theory. Here 'individuals' have high transaction costs (due to mistrust, duplication of work) but also flexibility, whilst 'collectives' have low costs (contracts) but suffer constraints. The OCS tries to balance these dynamic/static elements to gain a compromise ('edge of chaos') of optimum balance. We can regards the compromise 'organizations' here as variable length chromosomes, so a population of modular rule systems is possible.

Another approach is the XCS system, where strength is replaced by accuracy, allowing optimized populations of rules to arise in certain circumstances, and by using a 'niched multiobjective genetic algorithm' these are applicable to multiple payoff landscapes also. This idea also allows planning to be incorporated (animat behaviour, applicable to situated robotics).

The schemata considered up to now did not contain any explicit memory (the strength was an implicit memory but not used in the matching process), each message was interpreted in isolation from any previous system states. We can however generalise the idea of schemata and this brings us to the Finite State Machine. Like the classifier this responds to input messages and generates an output. The rule or 'transition table' specifies for each input combination what the output state should be, and this can be described as a logical function (normally using bivalent logic, but we can also have Fuzzy State Machines that use fuzzy logic). The inputs here can also be generalised to include 'tags' (identification strings) allowing machines (or 'agents') to recognise each other and perform type specific interactions.

We can incorporate memory into these multistate machines and if we do so then the output depends not only on the current input but on an history, which may be shallow (just the previous input) or rich (a whole life experience). In this form of decision unit we approach the Complex Adaptive System and ALife types of scenario. Despite the complexity of these components however we still usually assume a fixed population with an external method (the GA) for creating or deleting members.

CGPs are a new formulation (also by Holland) of classifier systems, intended to generalise to modelling all forms of systems. In this formulation, rules (or procedures) are allowed to generate (or produce) new states within a constrained (limited scope) environment. Thus CGPs can be viewed as methods to compress global state space, ways of coding rules that can create all the states needed in any particular representation (e.g. a chess game or a Cellular Automata).

Classifier outputs cannot normally create new classifiers, that is the role of the GA. But by relaxing that restriction we can arrive at a more general form of broadcast system which can create its own parts. In these procedures the rules can specify not only actions on the environment but actions on the agent structure itself. This variable form of constrained generating procedure (cgp-v) is potentially a universal constructor as well as a universal computer, since no external control or 'boss' component (like the GA) need now exist. Such an autonomous, free form and powerful agent is, needless to say, well beyond our current practical capabilities !

Whilst the simpler forms of production system are easy to use and to illustrate, these types of system become extremely complex as we relax the constraints and add new features. We are dealing here with infinite areas in state space, very high dimensional systems whose analysis is difficult and applications as yet unclear. Whilst in theory they have tremendous potential to model intelligent processes, they are at the cutting edge of complex systems research, especially when combined with the other complexity formalisms mentioned herein.

The ability to develop systems that can learn, that can modify themselves, that can act on their environment and also generate novelty opens up a future of intelligent machines with all the philosophical and technical problems that this brings. Whether this research direction will bear fruit or not is less important than the opportunity it gives us to understand the complexity of our own planet and the emergent creative interplay between diverse agents and diverse situations that can arise in strongly cooperating models.